Autograding Primer for CS – zyBooks Guide

Autograding is a must for computer science education, and for good reason.

For instructors, it dramatically reduces the time spent manually grading assignments, so they can focus on analyzing their students’ performance and giving them meaningful feedback. For students, it helps them learn as they go, at exactly the right moment (see below). And autograding does all of this at scale. Think of a CS1 course with 400+ students.

In this post, I’ll help you supercharge your autograding efforts. I’ll share insights on how to use autograding to improve how you teach and how your students learn. And I’ll walk you through the three most important autograding methodologies and how to use them.

Let’s get to it.

In this post

Autograding and the data challenge

Autograding benefits for students

Autograding and the data challenge

I think we can agree that the issue with data is not that we don’t have enough of it. We test the heck out of students. We give them tons of assessments. We give them tons of tasks to complete.

The challenge is making sense of this growing mountain of data, quickly, so you can have actionable information right now, not tomorrow or next week. This is where autograding comes in.

When you auto-grade assessments, you automatically see scores, analytics, and even how much time your students are spending on tasks. Where are students succeeding? Where are they struggling? You can see all this instantly as you’re getting ready for class.

Point of intervention is… now

The formative assessment data you gather with autograding gives you the power to adjust your teaching at the ideal point of intervention. Maybe you’ve covered functions, but you see the class is still struggling with them. So you can go over functions again in your next class. This way you’ll avoid some unpleasant summative surprises.

Autograding also lets you drill down to the individual student level. You can see, hey, these two students are really struggling, and this student never turned in their work. So you can also intervene with individual students right now, before they fall further behind.

Autograding benefits for students

Autograding isn’t just the gift that keeps on giving for instructors. It’s a game-changer for students, helping them improve their understanding of the material as they learn. Actually, this is my favorite part of autograding.

For many students, computer science is a brand new subject. From kindergarten to their university experience, they’ve been writing, they’ve been doing math. So they have a grounding in those subjects, even when encountering new stuff. But CS? It’s often a new and exciting adventure.

Instant feedback confidence builder

Auto-graded assessments to the rescue!

Autograding provides students with instant feedback on their work as they’re doing it. They can check if a piece of code is correct, or not. The auto-grader can also give students actionable instructions as they’re tackling an assignment. That’s the best kind of instant gratification – the one that helps them succeed in class.

Instant feedback builds confidence as students progress. They don’t have to wait for you to return a grade to know if they’re understanding the material. In fact, for formative assessment pieces, instructors will often allow students to submit as many times as they need to, to get the code right. By reworking their submissions, students really learn how to solve problems.

Students will gain confidence about the work they’re doing, the work they’re submitting, and the grade they’re going to get in the course. And students will learn they can persist and push through anything you assign them.

When to use autograding?

Okay, we’ve established how autograding helps both instructors and students. So when to use autograding? (And when not to use autograding? More on that shortly.)

You definitely want to replace tedious and time-consuming tasks with autograding. You can and should automate the checking of all reproducible values, returned values and predictable functionality of your students’ code. The key to success here is providing clear instructions with detailed expectations about what your students’ code should accomplish. Make sure they follow reproducibility guidelines that you set, so their code isn’t fighting with the defined test cases.
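One possible reproducibility guideline, offered here as an assumption rather than anything specific to a particular autograder, is to have students seed their random number generator with a value you specify. The sketch below uses a hypothetical roll_dice() function and an arbitrary seed of 42 to show that the same seed produces the same values on every run, which is what lets a test case check them.

```python
import random

def roll_dice(count: int, seed: int) -> list[int]:
    """Roll `count` six-sided dice using a generator seeded with `seed`."""
    rng = random.Random(seed)  # private generator, unaffected by other code
    return [rng.randint(1, 6) for _ in range(count)]

# Hypothetical guideline: "seed your generator with 42".
first_run = roll_dice(5, seed=42)
second_run = roll_dice(5, seed=42)

print(first_run == second_run)  # True: same seed, same rolls
```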

When not to use autograding?

Autograding isn’t for everything. And it certainly doesn’t replace the instructor. You’ll need to manually review at least some aspects of a coding assignment, like checking for good variable names, readability and design. And more advanced creative coding projects (like those in upper-level CS courses) may vary too much from student to student to be auto-graded completely.

That said, most projects have at least some reproducible elements that can be auto-graded. Consider allocating some of an assignment’s points to automatic checks and the rest to manual checks. This way, you and your students get the best of both worlds: you’ll save time, and they’ll receive meaningful feedback instantly while they’re working on the assignment.

One final note: With the rapid advances in generative AI tools like ChatGPT, we may soon be able to give students fully automated feedback on even advanced creative coding projects. Stay tuned!

Types of autograding

Now that we have a handle on the “why” of autograding, let’s dive into the “how.” Here are the three primary methodologies:

Three types of autograding

- Compare output tests

- Unit tests

- Custom script tests

Compare output tests

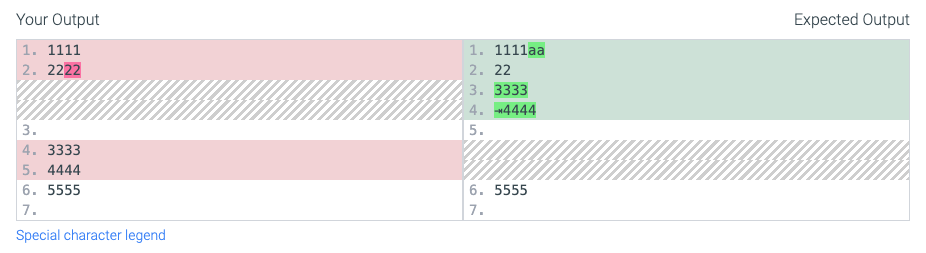

The most basic autograding approach is to use a simple compare output test. Compare output tests run a student’s code and compare the code’s output to an expected output you provide. Typically, results are shown to students in the form of a “diff” so they can see the differences in their code’s output versus the expected output.
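To make the idea concrete, here is a minimal sketch of the comparison step in Python. It assumes the student’s output has already been captured as a string, and the compare_output() helper and the sample strings are illustrative, not part of any particular autograder.

```python
import difflib

def compare_output(actual: str, expected: str) -> tuple[bool, str]:
    """Return (passed, diff) for an exact comparison of two outputs."""
    diff_lines = difflib.unified_diff(
        expected.splitlines(),
        actual.splitlines(),
        fromfile="expected",
        tofile="actual",
        lineterm="",
    )
    return actual == expected, "\n".join(diff_lines)

expected_output = "Hello, world!\nGoodbye!\n"
student_output = "Hello world\nGoodbye!\n"  # hypothetical captured output

passed, diff = compare_output(student_output, expected_output)
print("PASS" if passed else "FAIL")
print(diff)  # lines starting with "-" are expected, "+" are the student's
```

In the diff, the student can see right away that the comma and exclamation mark are missing from the first line, while the second line matches.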

Example of a compare output test

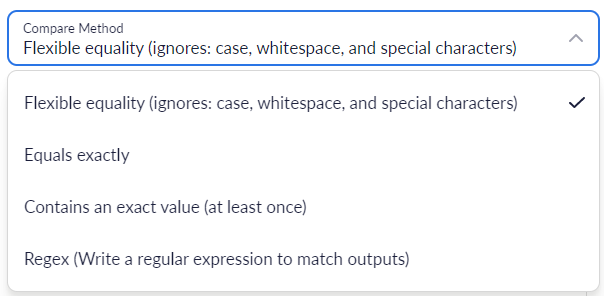

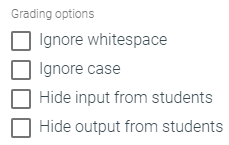

Of course, you may not always want to compare student code output exactly with the expected output. Whitespace and case, for example, are usually not important factors for student code correctness. So most autograding solutions have ways for you to customize how the comparison is done. With many applications, you can even provide your own regular expression to match the outputs.
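As a rough sketch of what such customization might look like, the Python below shows one lenient comparison that ignores case and whitespace, and one that matches the output against a regular expression. The function names and sample strings are hypothetical; a real autograding tool would expose these as settings rather than code.

```python
import re

def normalize(text: str) -> str:
    """Lowercase the text and collapse each run of whitespace
    (spaces, tabs, and newlines) into a single space."""
    return " ".join(text.lower().split())

def lenient_match(actual: str, expected: str) -> bool:
    """Compare two outputs while ignoring case and whitespace differences."""
    return normalize(actual) == normalize(expected)

def regex_match(actual: str, pattern: str) -> bool:
    """Pass when the whole output (minus surrounding whitespace)
    matches an instructor-supplied pattern."""
    return re.fullmatch(pattern, actual.strip()) is not None

print(lenient_match("  Total:   42\n", "total: 42"))            # True
print(regex_match("Average: 3.50\n", r"Average: \d+\.\d{2}"))   # True
```

Note that this normalize() treats line breaks as ordinary whitespace, which may be too forgiving for assignments where the line structure matters.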

Customizing compare methods

Some programs may wait for user input, so typically autograding systems will have a way for you to provide lines of input to feed to the student’s code when it is run. It’s common to create different test cases with different input values to check that the student’s code is producing the correct output based on the input it is given.
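Here is a minimal sketch of that idea, assuming the submission is a Python program that reads from standard input. The run_with_input() helper, the sample submission, and the test cases are all made up for illustration.

```python
import subprocess
import sys

def run_with_input(source: str, stdin_text: str) -> str:
    """Run Python source in a fresh interpreter, feeding it stdin_text,
    and return whatever it printed to standard output."""
    result = subprocess.run(
        [sys.executable, "-c", source],
        input=stdin_text,
        capture_output=True,
        text=True,
        timeout=10,  # stop submissions that hang waiting for more input
    )
    return result.stdout

# Hypothetical submission: read an integer and print its double.
student_code = "n = int(input())\nprint(n * 2)"

# Each test case pairs the input lines with the expected output.
test_cases = [("3\n", "6\n"), ("-4\n", "-8\n"), ("0\n", "0\n")]

results = [run_with_input(student_code, given) == expected
           for given, expected in test_cases]
print(results)  # one True/False per test case
```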

Unit tests

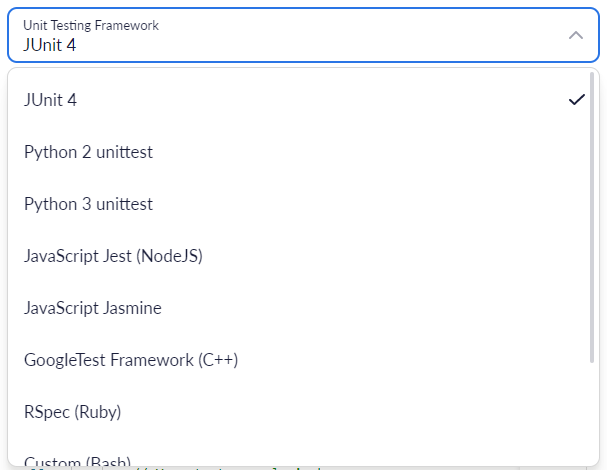

Output comparisons are a great first step in autograding student code, but to test more advanced projects you need to do unit tests. Unit tests help you test “units,” or modular components of code, to “assert” that they meet certain conditions. For example, you can unit test specific functions/methods, classes, files, or other modular components of a project, but not the project as a whole. There are specific unit testing libraries designed to help you do this in different programming languages. Python has a built-in testing library called unittest, while Java has a popular framework called JUnit.

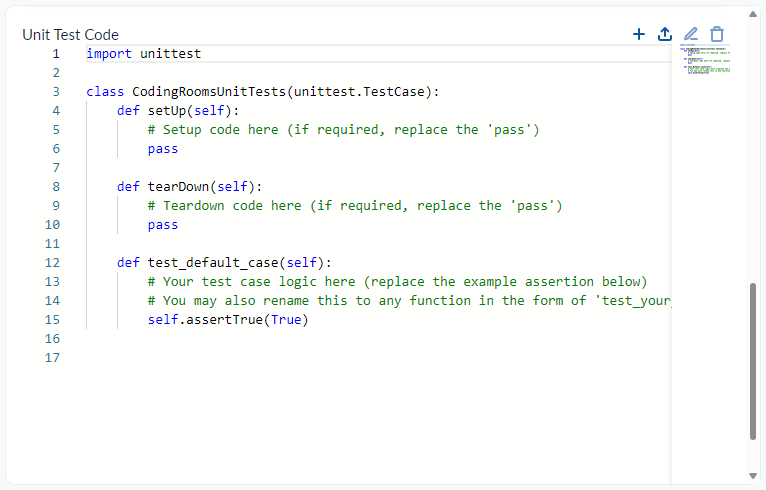

Example of a Python unittest

A typical unit test allows you to define code you want to run before and after each test (setUp and tearDown, seen in the Python example above), as well as the code you want to run to perform the test. Each test needs to end with an assertion – a condition that determines if the test passes or fails.
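For readers who want that structure in text form, here is a minimal sketch using Python’s unittest. The ScoreListTest class and its data are hypothetical, and the tests are run through an explicit runner so the snippet works when pasted into a script.

```python
import io
import unittest

class ScoreListTest(unittest.TestCase):
    def setUp(self):
        # Runs before each test method: build a fresh fixture.
        self.scores = [70, 80, 90]

    def tearDown(self):
        # Runs after each test method: release the fixture.
        self.scores = None

    def test_highest_score(self):
        # The assertion decides whether this test passes or fails.
        self.assertEqual(max(self.scores), 90)

    def test_average_score(self):
        self.assertEqual(sum(self.scores) / len(self.scores), 80)

# Load and run the tests, capturing the runner's report in memory.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ScoreListTest)
result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
print(result.testsRun, result.wasSuccessful())
```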

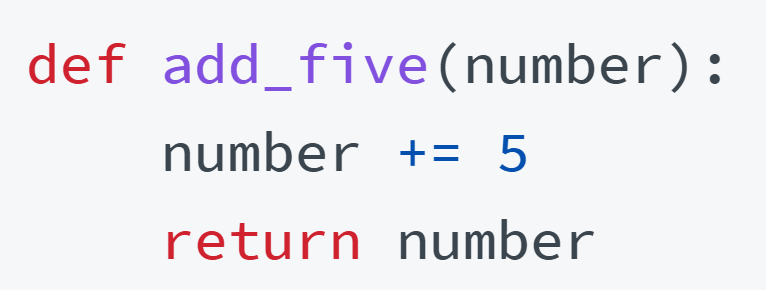

Let me give you a simple Python example. Imagine students need to write a function named add_five() that takes an integer as a parameter and returns 5 plus the parameter value.

This might be the student’s solution:

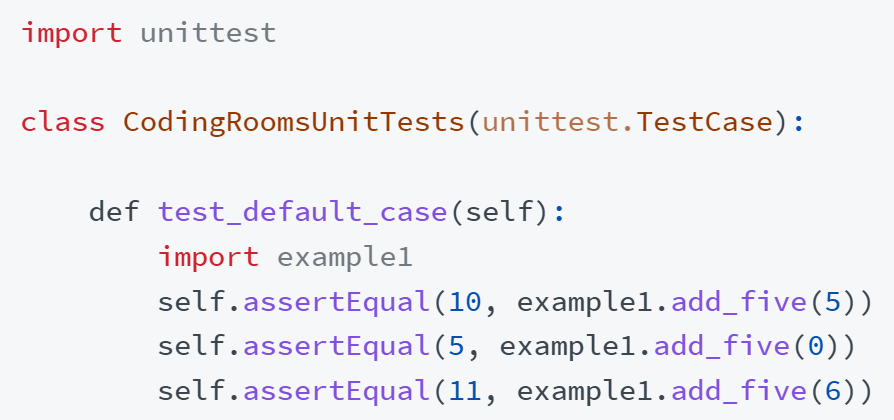

We can test the student’s solution using a unit test like this:
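As a minimal sketch, the code below defines a plausible add_five() and a test class for it in the same file. In practice the function would be imported from the student’s submission, and the test names and sample values here are illustrative choices.

```python
import io
import unittest

def add_five(number):
    """Hypothetical student submission."""
    return number + 5

class AddFiveTest(unittest.TestCase):
    def test_positive_value(self):
        self.assertEqual(add_five(10), 15)

    def test_zero(self):
        self.assertEqual(add_five(0), 5)

    def test_negative_value(self):
        self.assertEqual(add_five(-5), 0)

# Load and run the tests, capturing the runner's report in memory.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddFiveTest)
result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
print(result.testsRun, result.wasSuccessful())
```

Testing zero and a negative value alongside a positive one helps catch solutions that only work for the example given in the assignment.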

Custom script tests

If you need even more control and power when testing student code, you can always design your own shell script. The compare output tests and unit tests described above help you easily create logic to determine if a student’s code passes or fails your test. By creating your own shell script, you have full control over the logic: what counts as a pass or a failure, how many points to assign, and what feedback to give.

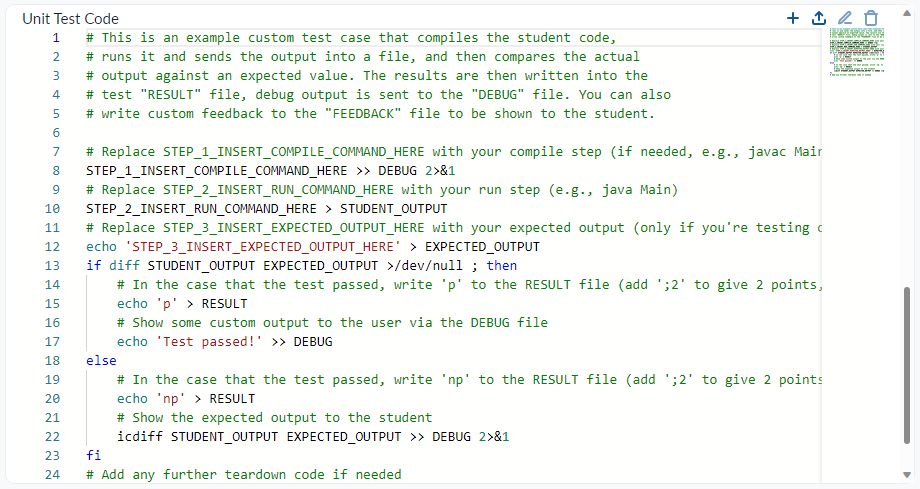

Bash script test

In the image above, the script writes to different files to display debugging information, display feedback, and determine if a test passes or not. For example, you write “p” to the RESULT file to indicate that the student’s code passed the test. You can also assign points, say two points, by writing “p;2” to the RESULT file.
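The same pass/fail logic can be sketched in Python instead of Bash, to stay consistent with the other examples here. Only the “p” and “p;2” formats and the RESULT file come from the description above. The failing marker, the FEEDBACK file name, the temporary directory, and the add_five() submission are assumptions made for illustration.

```python
import tempfile
from pathlib import Path

PASS_MARKER = "p"   # from the post: "p" marks a passing test
FAIL_MARKER = "np"  # ASSUMPTION: the post does not name the failing marker

def add_five(number):
    """Hypothetical student submission under test."""
    return number + 5

def grade(result_file: Path, feedback_file: Path, points: int = 2) -> None:
    """Run the checks, then record the outcome and the feedback
    in two separate files."""
    checks = {
        "add_five(10) should return 15": add_five(10) == 15,
        "add_five(-5) should return 0": add_five(-5) == 0,
    }
    failures = [message for message, ok in checks.items() if not ok]
    if failures:
        result_file.write_text(FAIL_MARKER)
        feedback_file.write_text("\n".join(failures) + "\n")
    else:
        # "p;2" style: pass marker, semicolon, points earned.
        result_file.write_text(f"{PASS_MARKER};{points}")
        feedback_file.write_text("All checks passed.\n")

with tempfile.TemporaryDirectory() as workdir:
    result_file = Path(workdir) / "RESULT"
    feedback_file = Path(workdir) / "FEEDBACK"  # hypothetical file name
    grade(result_file, feedback_file)
    result_text = result_file.read_text()
    feedback_text = feedback_file.read_text()

print(result_text)  # p;2
```

A real test would write to whatever file locations the autograding platform provides rather than to a temporary directory.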

You can also bundle additional files with the test, like unit test code, data files, or executables. This allows you to add the files you need and fully build the test case you want.

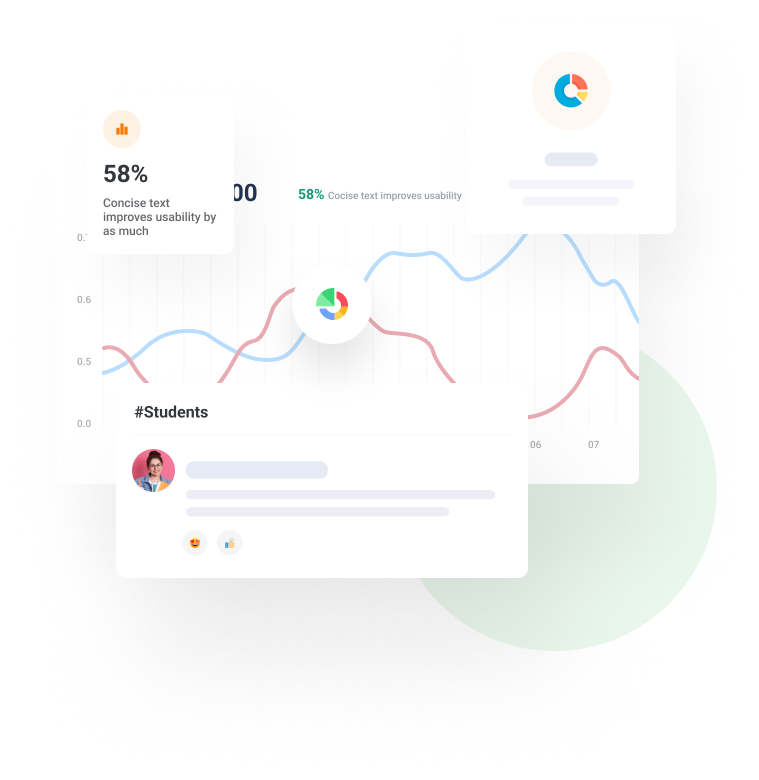

Advanced zyLabs

Here at zyBooks, I’m excited to share that we just launched our brand new Advanced zyLabs for beta release. Advanced zyLabs offers a fully professional cloud IDE environment that runs on any browser and device, and much more. The three autograding approaches we covered above are also fully integrated into the lab, giving you powerful options.

If you’re interested in becoming a beta user of Advanced zyLabs click here.

Final thoughts

I hope you found this autograding primer helpful! I’m curious to know how you’re using autograding in your own classroom. I’d welcome your thoughts, comments and insights on that, or anything else related to autograding! Email me at officehours@zybooks.com.

Autograding in Action

In this video, zyBooks’ Lead Product Developer and autograding authority Joe Mazzone demonstrates the three autograding approaches using our Advanced zyLabs application: